KD55XD8005BU: 8-bit or 10-bit?

Hi,

Someone at work mentioned that, for PS4 Pro gaming (which I have) on HDR TVs, there is a difference between 8-bit and 10-bit panels (about 16.7 million vs about 1.07 billion colours?).
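For reference, those colour counts come from simple arithmetic: each of the red, green and blue channels has 2^bits shades, and the total number of colours is that figure cubed. A minimal Python sketch (the function name `colour_count` is just illustrative):

```python
def colour_count(bits_per_channel: int) -> int:
    """Distinct colours for an RGB panel with the given bit depth per channel."""
    levels = 2 ** bits_per_channel  # shades per channel (R, G or B)
    return levels ** 3              # every combination of R, G and B


for bits in (8, 10):
    print(f"{bits}-bit: {2 ** bits} shades per channel, {colour_count(bits):,} colours")
```

Running it prints 256 shades and 16,777,216 colours for 8-bit, and 1024 shades and 1,073,741,824 colours for 10-bit.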

Could someone please tell me which one my TV is? The model is KD55XD8005BU.

Thanks.

Aah ok cool. Thank you for replying.

If you can't discern colors from pixels, you probably shouldn't worry.

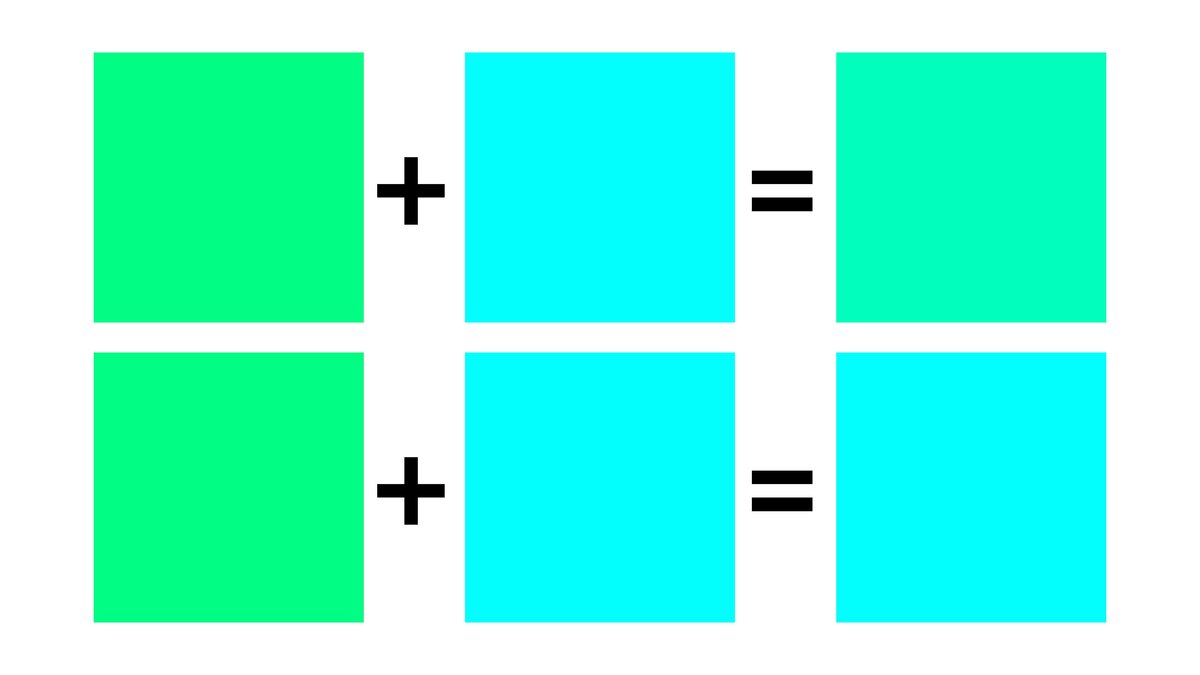

Your panel is 8-bit + FRC (frame rate control). Does this help you?

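Basically it's a "virtual 10-bit" panel (about 1.07 billion colours instead of the roughly 16.7 million of plain 8-bit). The wider colour range is achieved through an optical effect: the panel rapidly alternates each pixel between two adjacent 8-bit shades, and the eye blends them into an in-between shade. Personally, I appreciate the difference very much in SDR; in HDR, not so much, or rather, not always. The picture seems much nicer.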
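The FRC idea can be pictured with a simplified model: every 8-bit step covers four 10-bit steps, so to show a 10-bit level the panel displays the next-higher 8-bit shade in some frames of a 4-frame cycle and the lower shade in the rest, and the eye averages them. This is a minimal Python sketch under that assumption only; the function names and the fixed 4-frame cycle are illustrative, not how any particular Sony panel is documented to work (real panels typically also vary the pattern between neighbouring pixels).

```python
def frc_frames(target_10bit: int) -> list[int]:
    """Approximate a 10-bit level on an 8-bit panel (simplified 4-frame FRC model).

    Over a 4-frame cycle, the higher of two adjacent 8-bit levels is shown in
    `remainder` frames and the lower level in the remaining frames.
    """
    if not 0 <= target_10bit <= 1023:
        raise ValueError("target must be a 10-bit value (0-1023)")
    base, remainder = divmod(target_10bit, 4)  # 4 ten-bit steps per eight-bit step
    high = min(base + 1, 255)                  # clamp at the top of the 8-bit range
    return [high if frame < remainder else base for frame in range(4)]


def perceived_level(frames: list[int]) -> float:
    """Rough model of what the eye sees: the average over the cycle, in 8-bit units."""
    return sum(frames) / len(frames)


frames = frc_frames(514)        # 514 / 4 = 128.5 in 8-bit units
print(frames)                   # [129, 129, 128, 128]
print(perceived_level(frames))  # 128.5
```

The alternating shades are what give FRC its "virtual" extra bit depth: no single frame contains the in-between level, only the average over time does.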

Regarding HDR, I read this about FRC on Wikipedia:

> FRC tends to be most noticeable in darker tones, while dithering appears to make the individual pixels of the LCD visible.

Could this be what causes the foggy, washed-out look in many dark HDR scenes? That effect is completely absent in Netflix's Star Trek Discovery (and the TV does show the HDR logo). Is there a chance that Netflix, besides downscaling the show to 1080p, is also using a sort of "HDR8"? If such a thing even exists, of course.